This content is based on the original article by Matt Welsh on medium.com.

The original article is available at the following link: The End of Programming

All of the art in this post was generated by DALL-E 2.

I came of age in the 1980s, programming personal computers like the Commodore VIC-20 and Apple IIe at home. I went on to study Computer Science in college and ultimately earned a PhD at Berkeley, and the bulk of my professional training was rooted in what I will call “classical” CS: programming, algorithms, data structures, systems, programming languages.

In Classical CS, the main goal is to turn an idea into a program written by a person: source code in a language like Java, C++, or Python. Every idea in Classical CS, no matter how complex or sophisticated (from a database join algorithm to the mind-bogglingly obtuse Paxos consensus protocol), can be expressed as a human-readable, human-comprehensible program.

When I was in college in the early '90s, AI (artificial intelligence) was not a very popular field. My first research job, at Cornell, was with Dan Huttenlocher, an expert in computer vision (he's now the dean of the MIT Schwarzman College of Computing). In Dan’s PhD-level computer vision course in 1995 or so, we never once discussed anything resembling deep learning or neural networks; it was all classical techniques, like detecting edges in images or measuring how similar two shapes are. Deep learning was in its infancy then and was not yet considered a mainstream AI technique.

Of course, this was 30 years ago, and a lot has changed since then. But one thing that hasn't changed much is that Computer Science is still taught with a focus on data structures, algorithms, and programming. I think that in 30 years, or even 10, we won't be teaching CS this way anymore. In fact, I think CS is about to change dramatically, and most of us aren't ready for it.

Programming will be obsolete

I think the conventional idea of "writing a program" will soon be gone. For most applications, instead of software as we know it, we'll have AI systems that are trained, not programmed. When we do need a "simple" program (after all, not everything needs a huge AI model running on many computers), that program will itself be generated by an AI rather than written by hand.

I don't think this idea is crazy. The first Computer Scientists, who came out of Electrical Engineering, probably believed that all future Computer Scientists would need a deep understanding of how computer chips work, binary arithmetic, and processor design. But today, I would bet that 99% of people writing software have almost no clue how a CPU actually works, let alone the physics underlying transistor design. In the same way, I think the Computer Scientists of the future will be so far removed from the classic definitions of “software” that they would be hard-pressed to reverse a linked list or implement Quicksort. (Hell, I’m not sure I remember how to implement Quicksort myself.)

AI coding assistants like CoPilot are only scratching the surface of what I’m talking about. It seems obvious to me that all programs in the future will ultimately be written by AIs, with humans relegated to, at best, a supervisory role.

If you don't believe this, just look at how fast AI is getting better at making images. The difference in quality and complexity between DALL-E v1 and DALL-E v2 — announced only 15 months later — is amazing. If I have learned anything over the last few years working in AI, it is that it is very easy to underestimate the power of increasingly large AI models. Things that seemed like science fiction only a few months ago are rapidly becoming reality.

So I'm not just talking about CoPilot replacing programmers. I'm talking about replacing the entire concept of writing programs with training models.

In the future, CS students won't need to learn such mundane skills as adding a node to a binary tree or coding in C++. That kind of instruction will be as antiquated as teaching engineering students how to use a slide rule.

Future engineers will be able to quickly spin up a massive AI model that already knows everything humans know (and more), ready to take on any task. Most of the work will consist of choosing the right examples, the right training data, and the right ways to evaluate the training process. Models powerful enough to learn from just a few examples will need only a handful of good examples of the task. Massive, human-curated datasets won't be necessary in most cases, and most people "training" an AI model won't be doing complex math, or anything like it. They will teach by example, and the machine will do the rest.
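To make "teaching by example" a little more concrete, here is a minimal sketch of a few-shot prompt, assuming a text-completion model that simply continues whatever plain text it is given. The sentiment task, the example reviews, and the function name `build_few_shot_prompt` are hypothetical illustrations, not anything from the original article, and the sketch only assembles the prompt; it does not call any real model API.

```python
# Hypothetical task: classify the sentiment of a short product review.
# Each example is an (input, expected output) pair chosen by the "teacher".
EXAMPLES = [
    ("The battery lasts all day.", "positive"),
    ("The screen cracked within a week.", "negative"),
    ("Setup took two minutes and just worked.", "positive"),
]


def build_few_shot_prompt(examples, query, instruction="Classify the sentiment of each review."):
    """Assemble a plain-text few-shot prompt: an instruction, worked examples, then the new query.

    Hypothetical helper: it assumes a completion-style model that will fill in
    the final "Sentiment:" line by imitating the pattern in the examples.
    """
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")  # blank line separates examples
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")  # left open for the model to complete
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_few_shot_prompt(EXAMPLES, "It stopped charging after a month."))
```

In this sketch the "training" consists entirely of the worked examples: changing the task means swapping the examples, not rewriting the code.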

In this New Computer Science, if we even call it Computer Science at all, the machines will be so powerful and will already know how to do so many things that the field will look less like engineering and more like education: the question becomes how best to teach the machine, not unlike how we teach children in school. But unlike children, these AI systems will be flying our airplanes, running our power grids, and possibly even governing entire countries. I would argue that most of Classical CS becomes irrelevant when our focus turns to teaching intelligent machines rather than directly programming them. Programming, as we know it now, will in fact be dead.

How does all of this change how we think about the field of Computer Science?

The new atomic unit of computation becomes not a processor, memory, and I/O system implementing a von Neumann machine, but rather a massive, pre-trained, highly adaptive AI model.

This is a seismic shift in the way we think about computation: no longer as a predictable, static process governed by instruction sets, type systems, and notions of decidability. AI-based computation has long since crossed the Rubicon beyond which static analysis and formal proof no longer apply. We are rapidly moving towards a world where the fundamental building blocks of computation are temperamental, mysterious, adaptive agents.

This shift is underscored by the fact that nobody actually understands how large AI models work.

Researchers are publishing papers that discover new behaviors of existing large models, even though these systems were “engineered” by humans. Large AI models can do things they were never explicitly trained to do, which should scare the shit out of Nick Bostrom and anyone else (rightfully) worried about a superintelligent AI running amok. We currently have no way, apart from empirical study, to determine the limits of current AI systems. As for future AI models that are orders of magnitude larger and more complex: good friggin’ luck!

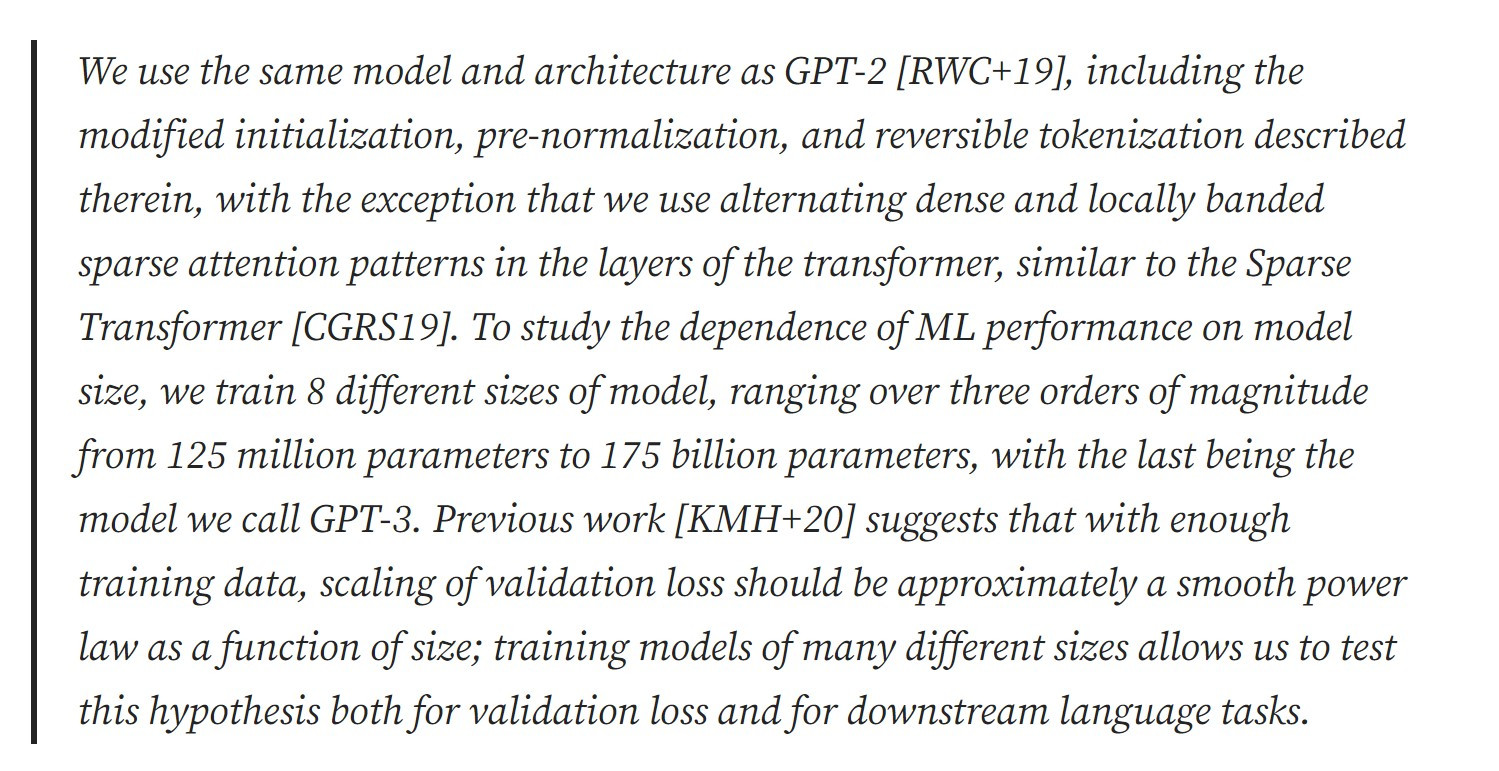

The shift in focus from programs to models should be obvious to anyone who has read any modern machine learning papers. These papers barely mention the code or systems underlying their innovations; the building blocks of AI systems are much higher-level abstractions like attention layers, tokenizers, and datasets. A time traveller from even 20 years ago would have a hard time making sense of the three sentences in the (75-page-long!) GPT-3 paper that describe the actual software built for the model.

This shift in the underlying definition of computing presents a huge opportunity, and plenty of huge risks. Yet I think it’s time to accept that this is a very likely future, and evolve our thinking accordingly, rather than just sit here waiting for the meteor to hit.
